Hi,

Unable to download reports and the Mobile Workers status. A "This site can’t be reached" message appears.

Thank you.

Hi @sirajhassan, sorry you're facing this issue on your local instance. Can you please share the steps to replicate the issue so that someone on the forum can try to investigate?

Thank you, Ali,

One CouchDB instance is now running without warnings or errors, and I'm planning and preparing a fresh copy of the Ubuntu 22 VMs for replication.

The steps I followed to install and replicate CouchDB:

curl -XGET 172.19.3.35:15984

{"couchdb":"Welcome","version":"3.3.1","git_sha":"1fd50b82a","uuid":"61f66e67e65a525997c23960fb11ef50","features":["access-ready","partitioned","pluggable-storage-engines","reshard","scheduler"],"vendor":{"name":"The Apache Software Foundation"}}
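The curl check above can be repeated for every CouchDB node with a short Python sketch. It assumes the four couchdb2 node IPs that appear in the ansible output further down this thread and the chttpd port 15984; parse_welcome, check_node and check_all are hypothetical helper names, not part of commcare-cloud.

```python
import json
import urllib.request

# Assumption: couchdb2 node IPs as they appear in the ansible output in this
# thread, and 15984 as the [chttpd] port used in this setup.
COUCH_NODES = ("172.19.3.35", "172.19.3.37", "172.19.3.55", "172.19.4.50")
CHTTPD_PORT = 15984


def parse_welcome(body: str) -> str:
    """Return the version from CouchDB's root ("/") JSON response."""
    data = json.loads(body)
    if data.get("couchdb") != "Welcome":
        raise ValueError("response is not a CouchDB welcome message")
    return data["version"]


def check_node(host: str, port: int = CHTTPD_PORT, timeout: float = 5.0) -> str:
    """Python equivalent of `curl -XGET host:port`; returns the CouchDB version."""
    with urllib.request.urlopen(f"http://{host}:{port}/", timeout=timeout) as resp:
        return parse_welcome(resp.read().decode("utf-8"))


def check_all(nodes=COUCH_NODES) -> dict:
    """Map each node to its version string, or to the error it raised."""
    results = {}
    for node in nodes:
        try:
            results[node] = check_node(node)
        except (OSError, ValueError) as exc:  # refused, timed out, HTTP error, bad JSON
            results[node] = f"ERROR: {exc}"
    return results
```

Calling check_all() from a machine that can reach the nodes should return "3.3.1" for each healthy node and an ERROR string for any node that cannot be reached.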

[chttpd]
port = 15984
bind_address = 172.19.3.37
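To double-check what each node's [chttpd] section actually contains, the ini file can be parsed with Python's configparser. This is a sketch: chttpd_settings and listens_on are hypothetical helpers, the 5984 / 127.0.0.1 fallbacks are assumed CouchDB 3.x defaults, and the local.ini location varies by install. It is worth running on every node, since the snippet above binds to 172.19.3.37 while the curl call targets 172.19.3.35; presumably each node binds to its own address.

```python
import configparser

# Assumed fallbacks when [chttpd] omits a key (CouchDB 3.x defaults).
DEFAULT_PORT = 5984
DEFAULT_BIND = "127.0.0.1"
WILDCARDS = {"0.0.0.0", "::"}


def chttpd_settings(ini_text: str) -> dict:
    """Read port and bind_address from the [chttpd] section of a CouchDB ini file."""
    # strict=False tolerates repeated sections; interpolation=None leaves '%' alone.
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.read_string(ini_text)
    section = parser["chttpd"] if parser.has_section("chttpd") else {}
    return {
        "port": int(section.get("port", DEFAULT_PORT)),
        "bind_address": section.get("bind_address", DEFAULT_BIND),
    }


def listens_on(settings: dict, ip: str) -> bool:
    """Approximate check: wildcard addresses are treated as listening on every IP."""
    return settings["bind_address"] in WILDCARDS or settings["bind_address"] == ip
```

For example, reading that node's local.ini on 172.19.3.35 and passing the result to listens_on(settings, "172.19.3.35") should return True if the curl call above is expected to succeed.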

Give the ansible user access to the CouchDB files:

ansible couchdb2 -m user -i /home/administrator/commcare-cloud/environments/echis/inventory.ini -a 'user=ansible groups=couchdb append=yes' -u ansible --become -e @/home/administrator/commcare-cloud/environments/echis/public.yml -e @/home/administrator/commcare-cloud/environments/echis/.generated.yml -e @/home/administrator/commcare-cloud/environments/echis/vault.yml --vault-password-file=/home/administrator/commcare-cloud/src/commcare_cloud/ansible/echo_vault_password.sh '--ssh-common-args=-o UserKnownHostsFile=/home/administrator/commcare-cloud/environments/echis/known_hosts' --diff

172.19.3.37 | SUCCESS => {
    "append": true,
    "changed": false,
    "comment": ",,,",
    "group": 3001,
    "groups": "couchdb",
    "home": "/home/ansible",
    "move_home": false,
    "name": "ansible",
    "shell": "/bin/bash",
    "state": "present",
    "uid": 1001
}

172.19.3.35 | SUCCESS => {
    "append": true,
    "changed": false,
    "comment": ",,,",
    "group": 3001,
    "groups": "couchdb",
    "home": "/home/ansible",
    "move_home": false,
    "name": "ansible",
    "shell": "/bin/bash",
    "state": "present",
    "uid": 1001
}

172.19.3.55 | SUCCESS => {
    "append": true,
    "changed": false,
    "comment": ",,,",
    "group": 3001,
    "groups": "couchdb",
    "home": "/home/ansible",
    "move_home": false,
    "name": "ansible",
    "shell": "/bin/bash",
    "state": "present",
    "uid": 1001
}

172.19.4.50 | SUCCESS => {
    "append": true,
    "changed": false,
    "comment": "",
    "group": 3001,
    "groups": "couchdb",
    "home": "/home/ansible",
    "move_home": false,
    "name": "ansible",
    "shell": "/bin/bash",
    "state": "present",
    "uid": 1001
}
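The "changed": false results mean the ansible user was already in the couchdb group on all four nodes. To verify membership directly on a node, `id ansible` is enough; the Python sketch below does the same check programmatically. user_in_group is a hypothetical helper. Note that a newly added supplementary group only applies to login sessions started after the change.

```python
import grp
import os
import pwd


def user_in_group(user: str, group: str) -> bool:
    """True if `user` belongs to `group`, as primary or supplementary group."""
    try:
        gid = grp.getgrnam(group).gr_gid
        primary_gid = pwd.getpwnam(user).pw_gid
    except KeyError:  # unknown user or unknown group
        return False
    # getgrouplist() returns the primary gid plus every supplementary gid.
    return gid in os.getgrouplist(user, primary_gid)
```

On each node, user_in_group("ansible", "couchdb") should return True, matching "groups": "couchdb" above.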

ansible couchdb2 -m file -i /home/administrator/commcare-cloud/environments/echis/inventory.ini -a 'path=/opt/data/couchdb2/ mode=0755' -u ansible --become -e @/home/administrator/commcare-cloud/environments/echis/public.yml -e @/home/administrator/commcare-cloud/environments/echis/.generated.yml -e @/home/administrator/commcare-cloud/environments/echis/vault.yml --vault-password-file=/home/administrator/commcare-cloud/src/commcare_cloud/ansible/echo_vault_password.sh '--ssh-common-args=-o UserKnownHostsFile=/home/administrator/commcare-cloud/environments/echis/known_hosts' --diff

172.19.4.50 | SUCCESS => {
    "changed": false,
    "gid": 125,
    "group": "couchdb",
    "mode": "0755",
    "owner": "couchdb",
    "path": "/opt/data/couchdb2/",
    "size": 4096,
    "state": "directory",
    "uid": 118
}

172.19.3.37 | SUCCESS => {
    "changed": false,
    "gid": 125,
    "group": "couchdb",
    "mode": "0755",
    "owner": "couchdb",
    "path": "/opt/data/couchdb2/",
    "size": 4096,
    "state": "directory",
    "uid": 118
}

172.19.3.55 | SUCCESS => {
    "changed": false,
    "gid": 125,
    "group": "couchdb",
    "mode": "0755",
    "owner": "couchdb",
    "path": "/opt/data/couchdb2/",
    "size": 4096,
    "state": "directory",
    "uid": 118
}

172.19.3.35 | SUCCESS => {
    "changed": false,
    "gid": 125,
    "group": "couchdb",
    "mode": "0755",
    "owner": "couchdb",
    "path": "/opt/data/couchdb2/",
    "size": 4096,
    "state": "directory",
    "uid": 118
}
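The file module output can be cross-checked on a node with os.stat. A minimal sketch (describe_path is a hypothetical helper) that reports the same mode, owner and group fields, formatting the mode the way ansible prints it:

```python
import grp
import os
import pwd
import stat


def _name(lookup, ident: int) -> str:
    """Resolve a uid/gid to a name, falling back to the number if unknown."""
    try:
        return lookup(ident)[0]  # pw_name / gr_name
    except KeyError:
        return str(ident)


def describe_path(path: str) -> dict:
    """Collect the fields ansible's file module reports for a path."""
    st = os.stat(path)  # follows symlinks
    return {
        "is_dir": stat.S_ISDIR(st.st_mode),
        "mode": f"{stat.S_IMODE(st.st_mode):04o}",  # e.g. "0755", as ansible prints it
        "owner": _name(pwd.getpwuid, st.st_uid),
        "group": _name(grp.getgrgid, st.st_gid),
        "uid": st.st_uid,
        "gid": st.st_gid,
    }
```

Running describe_path("/opt/data/couchdb2/") on each node should show mode "0755" with owner and group couchdb, as in the output above.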

Copy file lists to nodes:

ansible all -m shell -i /home/administrator/commcare-cloud/environments/echis/inventory.ini -a '/tmp/file_migration/file_migration_rsync.sh --dry-run' -u ansible --become -e @/home/administrator/commcare-cloud/environments/echis/public.yml -e @/home/administrator/commcare-cloud/environments/echis/.generated.yml -e @/home/administrator/commcare-cloud/environments/echis/vault.yml --vault-password-file=/home/administrator/commcare-cloud/src/commcare_cloud/ansible/echo_vault_password.sh '--ssh-common-args=-o UserKnownHostsFile=/home/administrator/commcare-cloud/environments/echis/known_hosts' --diff --limit=

172.19.3.34 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.39 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.50 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.54 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.47 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.41 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.41 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.97 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.38 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.40 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.31 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.42 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.75 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.54 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.53 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.55 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.33 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.50 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.51 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.57 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.52 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.76 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.43 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.44 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.77 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.79 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.48 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.3.36 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.43 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.37 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.61 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.62 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.60 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.48 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.59 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.71 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.72 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.36 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.46 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code

172.19.4.63 | FAILED | rc=127 >>
/bin/sh: 1: /tmp/file_migration/file_migration_rsync.sh: not found
non-zero return code
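Exit status 127 is the shell's "command not found" code, so /tmp/file_migration/file_migration_rsync.sh did not exist on any of these hosts when the command ran (a file that exists but is not executable gives 126 instead). The command also targets `all` rather than the couchdb2 group, which is most likely why every host in the inventory reports the error. A small sketch reproducing the behaviour; shell_rc and preflight are hypothetical helpers, and running through /bin/sh -c mirrors how the /bin/sh error lines above were produced.

```python
import os
import subprocess


def shell_rc(command: str) -> int:
    """Run `command` via /bin/sh -c, as ansible's shell module does, and return its exit code."""
    return subprocess.run(
        ["/bin/sh", "-c", command],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    ).returncode


def preflight(script: str) -> str:
    """Explain, before running it, why a script would fail with 127 or 126."""
    if not os.path.isfile(script):
        return "missing (the shell would exit 127: command not found)"
    if not os.access(script, os.X_OK):
        return "present but not executable (the shell would exit 126)"
    return "ok"
```

For example, preflight("/tmp/file_migration/file_migration_rsync.sh") on a CouchDB node should return "ok" before the dry run is retried.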

CouchDB hosts in the inventory.ini file:

[couchdb2:children]
echis_server32
echis_server34
echis_server14
echis_server55
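To see which hosts the couchdb2:children group actually expands to, `ansible-inventory -i inventory.ini --graph couchdb2` is the standard tool. The Python sketch below illustrates the same expansion for a simple INI inventory; it is a simplification under stated assumptions (no host ranges such as host[01:10], no YAML inventories), and parse_ini_inventory / resolve_hosts are hypothetical names.

```python
def parse_ini_inventory(text: str) -> dict:
    """Map each [section] of an ansible INI inventory to its entry names."""
    sections = {"ungrouped": []}
    current = "ungrouped"
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", ";")):
            continue  # blank line or comment
        if line.startswith("[") and line.endswith("]"):
            current = line[1:-1]
            sections.setdefault(current, [])
            continue
        # First token is the host (or child group); the rest are host vars.
        sections[current].append(line.split()[0])
    return sections


def resolve_hosts(sections: dict, group: str, _seen=None) -> list:
    """Expand `group` and its [group:children] recursively into host names."""
    seen = set() if _seen is None else _seen
    if group in seen:  # guard against cycles
        return []
    seen.add(group)
    hosts = list(sections.get(group, []))
    for child in sections.get(f"{group}:children", []):
        hosts.extend(resolve_hosts(sections, child, seen))
    return list(dict.fromkeys(hosts))  # drop duplicates, keep order
```

resolve_hosts(parse_ini_inventory(text), "couchdb2") should list the same hosts that the couchdb2 ansible commands above ran against.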

Hi @sirajhassan, thanks for reaching out.

The report download issue and the Couch cluster issue are two different issues. (Could you please open a new topic for the Couch issue?)

Regarding the report download issue:

Regarding the Couch issue:

Thank you, Chris, for your response.

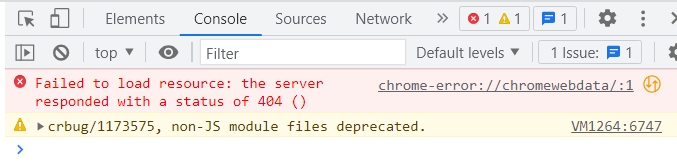

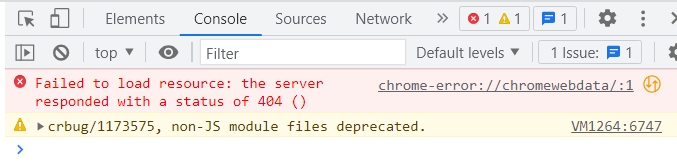

Report download issue:

CouchDB issue:

I posted the issue here

Interesting. So either the report has expired or it is missing for some reason. The report stays in the cache for 24 hours. You're probably using Redis as the cache backend; please confirm that it is up and running, healthy, and not full.
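One way to check that Redis is up and not full is to look at INFO memory. If maxmemory is reached, the eviction policy matters: policies such as allkeys-lru or volatile-lru can evict keys before their TTL, which could make a cached report disappear before the 24 hours are up, while noeviction makes new writes fail. A sketch using redis-py; the host and port are placeholders for your environment, and memory_status / check_redis are hypothetical helpers.

```python
def memory_status(info: dict, warn_ratio: float = 0.9) -> dict:
    """Summarise Redis INFO memory fields: how close used_memory is to maxmemory."""
    used = int(info["used_memory"])
    limit = int(info.get("maxmemory", 0))  # 0 means no limit is configured
    ratio = used / limit if limit else None
    return {
        "used_bytes": used,
        "limit_bytes": limit,
        "ratio": ratio,
        "near_full": ratio is not None and ratio >= warn_ratio,
        "policy": info.get("maxmemory_policy", "unknown"),
    }


def check_redis(host: str = "localhost", port: int = 6379) -> dict:
    """Ping Redis and report memory usage (requires the redis-py package)."""
    import redis  # imported here so memory_status() works without redis-py

    client = redis.Redis(host=host, port=port, socket_timeout=5)
    client.ping()  # raises a redis-py connection or timeout error if Redis is down
    return memory_status(client.info("memory"))
```

If check_redis() returns near_full True together with an evicting policy, that would be a plausible explanation for a report going missing early.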

To confirm that you're using Redis for this, you can do the following:

cchq {your env} django-manage shell

from django.conf import settings
from django.core.cache import DEFAULT_CACHE_ALIAS

getattr(settings, 'SOIL_DEFAULT_CACHE', DEFAULT_CACHE_ALIAS)

This returns the name of the cache that soil uses by default. From this line it is clear that we don't override the cache backend, so the default (SOIL_DEFAULT_CACHE) is used when set_task is called two lines below it.
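To go one step further and see which backend that alias points to, look it up in settings.CACHES. A minimal sketch; the helper names are hypothetical, and the "redis" substring check is a heuristic that matches both django-redis (django_redis.cache.RedisCache) and Django's built-in django.core.cache.backends.redis.RedisCache.

```python
def soil_cache_backend(caches: dict, soil_alias: str) -> str:
    """Return the BACKEND class path configured for the cache alias soil uses."""
    return caches[soil_alias]["BACKEND"]


def is_redis_backend(backend_path: str) -> bool:
    """Heuristic: Redis-based Django cache backends have 'redis' in their path."""
    return "redis" in backend_path.lower()
```

In the django-manage shell, after the imports above, is_redis_backend(soil_cache_backend(settings.CACHES, getattr(settings, 'SOIL_DEFAULT_CACHE', DEFAULT_CACHE_ALIAS))) should return True if soil is backed by Redis.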

If you're sure that the report should not have expired yet (that is, if you regenerate it and still face this issue), then I'd suggest watching the Django logs for any errors that might be masked (although I doubt this is the case).