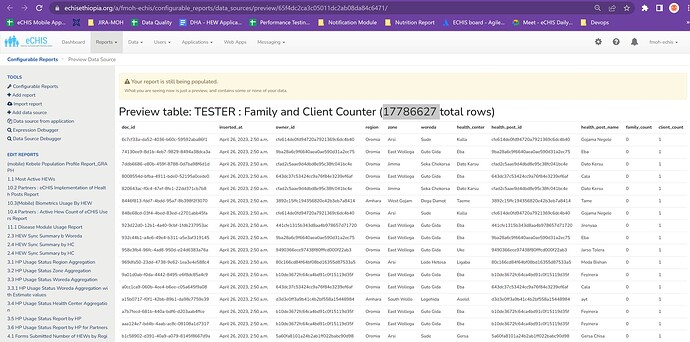

We have been testing the asynchronous implementation of the UCR improvement. After we started the build, it took 8 days to reach a count of 17 million. However, the count has stayed at that number for the last 10 days, even though the final count is expected to exceed 25 million. What could be causing this?
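As a rough sanity check on these numbers, the sketch below works out the average build rate and how long the remaining rows should have taken. It assumes a constant rate, which real rebuilds rarely have, and the helper name is hypothetical.

```python
def projected_days_remaining(rows_done: int, days_elapsed: float, rows_expected: int) -> float:
    """Hypothetical helper: days left if the build keeps its average rate so far."""
    if days_elapsed <= 0 or rows_done <= 0:
        raise ValueError("need positive progress to estimate a rate")
    rate_per_day = rows_done / days_elapsed
    remaining = max(rows_expected - rows_done, 0)
    return remaining / rate_per_day


# Figures from the report: 17 million after 8 days, more than 25 million expected.
rate = 17_000_000 / 8
print(f"average rate: {rate:,.0f} rows/day")  # average rate: 2,125,000 rows/day
print(f"days remaining at that rate: {projected_days_remaining(17_000_000, 8, 25_000_000):.1f}")
# days remaining at that rate: 3.8
```

At roughly 2.1 million per day, the remaining ~8 million should have taken about 4 more days, so a 10-day plateau suggests the build stopped rather than merely slowed down.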

Hi Andinet,

It is possible that the Celery task responsible for populating the UCR data source isn't running. Could you please confirm this by running the `check_services` management command on the Django machine?

Hi @zandre_eng,

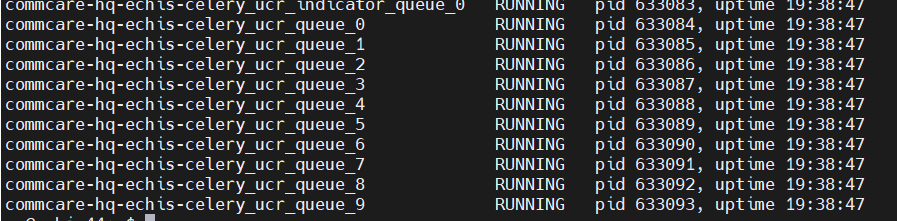

The Celery service is running.

SUCCESS (Took 0.11s) kafka : Kafka seems to be in order

SUCCESS (Took 0.01s) redis : Redis is up and using 863.35M memory

SUCCESS (Took 0.03s) postgres : default:commcarehq:OK auditcare:commcarehq_auditcare:OK p1:commcarehq_p1:OK p2:commcarehq_p2:OK p3:commcarehq_p3:OK p4:commcarehq_p4:OK p5:commcarehq_p5:OK p6:commcarehq_p6:OK p7:commcarehq_p7:OK p8:commcarehq_p8:OK proxy:commcarehq_proxy:OK synclogs:commcarehq_synclogs:OK ucr:commcarehq_ucr:OK Successfully got a user from postgres

SUCCESS (Took 0.02s) couch : Successfully queried an arbitrary couch view

SUCCESS (Took 0.06s) celery : OK

SUCCESS (Took 0.20s) elasticsearch : Successfully sent a doc to ES and read it back

SUCCESS (Took 0.34s) blobdb : Successfully saved a file to the blobdb

SUCCESS (Took 0.01s) formplayer : Formplayer returned a 200 status code: https://www.echisethiopia.org/formplayer/serverup

SUCCESS (Took 0.00s) rabbitmq : RabbitMQ OK

I suspect that the Celery task building the UCR may have hit an error and been killed. If you look through the Celery logs (specifically celery_background_log and celery_ucr_queue), are there any errors from around the time the UCR build got stuck?
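Searching large Celery logs by hand is tedious. The sketch below pulls out WARNING, ERROR and CRITICAL entries within a time window, assuming the header-line format seen in the log excerpts in this thread (`2023-05-14 11:33:22,268 ERROR [logger] message`). The function name is hypothetical, and the log file locations depend on the deployment.

```python
import re
from datetime import datetime, timedelta
from typing import Iterable, Iterator, Tuple

# Assumed header format, e.g.:
# 2023-05-14 11:33:22,268 ERROR [celery.utils.dispatch.signal] Signal handler ...
LOG_HEADER = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),\d{3} "
    r"(?P<level>[A-Z]+) \[(?P<logger>[^\]]+)\] (?P<msg>.*)$"
)
SEVERE = {"WARNING", "ERROR", "CRITICAL"}


def severe_entries(
    lines: Iterable[str], around: datetime, window: timedelta
) -> Iterator[Tuple[datetime, str, str, str]]:
    """Yield (timestamp, level, logger, message) for severe header lines within
    `window` of `around`. Traceback continuation lines do not match and are skipped."""
    for line in lines:
        m = LOG_HEADER.match(line.rstrip("\n"))
        if not m or m.group("level") not in SEVERE:
            continue
        ts = datetime.strptime(m.group("ts"), "%Y-%m-%d %H:%M:%S")
        if abs(ts - around) <= window:
            yield ts, m.group("level"), m.group("logger"), m.group("msg")


sample = [
    "2023-05-14 11:33:22,268 ERROR [celery.utils.dispatch.signal] Signal handler raised: ConnectionError(...)",
    "Traceback (most recent call last):",
    "2023-05-14 11:36:24,973 CRITICAL [celery.worker.request] Couldn't ack 47",
    "2023-05-10 09:00:00,000 ERROR [some.logger] unrelated, days earlier",
]
for ts, level, logger, _msg in severe_entries(sample, datetime(2023, 5, 14, 11, 35), timedelta(hours=1)):
    print(ts, level, logger)
# 2023-05-14 11:33:22 ERROR celery.utils.dispatch.signal
# 2023-05-14 11:36:24 CRITICAL celery.worker.request
```

With a real file, pass the open file object as `lines` (for example `with open(log_path) as f: ...`), where `log_path` is wherever the deployment writes the celery_ucr_queue log.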

The error and warning messages below were found in the celery_ucr_queue logs. Are they helpful for investigating the issue?

Unfortunately, I couldn't get the celery_background_log file.

2023-05-14 11:33:22,268 ERROR [celery.utils.dispatch.signal] Signal handler <function celery_add_time_sent at 0x7f05793bea60> raised: ConnectionError('Error 111 connecting to 172.19.4.33:6379. Connection refused.')

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 27, in _decorator

return method(self, *args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 76, in set

return self.client.set(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/client/default.py", line 166, in set

raise ConnectionInterrupted(connection=client) from e

django_redis.exceptions.ConnectionInterrupted: Redis ConnectionError: Error 111 connecting to 172.19.4.33:6379. Connection refused.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/utils/dispatch/signal.py", line 276, in send

response = receiver(signal=self, sender=sender, **named)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/signals.py", line 22, in celery_add_time_sent

TimeToStartTimer(task_id).start_timing(eta)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/signals.py", line 110, in start_timing

cache.set(self._cache_key, eta or datetime.datetime.utcnow(), timeout=3 * 24 * 60 * 60)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 34, in _decorator

raise e.__cause__

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/client/default.py", line 156, in set

return bool(client.set(nkey, nvalue, nx=nx, px=timeout, xx=xx))

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/commands/core.py", line 2302, in set

return self.execute_command("SET", *pieces, **options)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/integrations/redis.py", line 170, in sentry_patched_execute_command

return old_execute_command(self, name, *args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/client.py", line 1255, in execute_command

conn = self.connection or pool.get_connection(command_name, **options)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/connection.py", line 1442, in get_connection

connection.connect()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/connection.py", line 704, in connect

raise ConnectionError(self._error_message(e))

redis.exceptions.ConnectionError: Error 111 connecting to 172.19.4.33:6379. Connection refused.

2023-05-14 11:36:23,159 ERROR [celery.utils.dispatch.signal] Signal handler <function update_celery_state at 0x7f056fa189d0> raised: OperationalError('connection to server at "172.19.3.36", port 6432 failed: FATAL: client_login_timeout (server down)\n')

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 27, in _decorator

return method(self, *args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 94, in _get

return self.client.get(key, default=default, version=version, client=client)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/client/default.py", line 222, in get

raise ConnectionInterrupted(connection=client) from e

django_redis.exceptions.ConnectionInterrupted: Redis ConnectionError: Error 111 connecting to 172.19.4.33:6379. Connection refused.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/app/trace.py", line 451, in trace_task

R = retval = fun(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/integrations/celery.py", line 229, in _inner

reraise(*exc_info)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/_compat.py", line 60, in reraise

raise value

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/integrations/celery.py", line 224, in _inner

return f(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/app/trace.py", line 734, in __protected_call__

return self.run(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/heartbeat.py", line 118, in heartbeat

self.get_and_report_blockage_duration()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/heartbeat.py", line 74, in get_and_report_blockage_duration

blockage_duration = self.get_blockage_duration()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/heartbeat.py", line 70, in get_blockage_duration

return max(datetime.datetime.utcnow() - self.get_last_seen() - HEARTBEAT_FREQUENCY,

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/heartbeat.py", line 49, in get_last_seen

value = self._heartbeat_cache.get()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/celery_monitoring/heartbeat.py", line 27, in get

return cache.get(self._cache_key())

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 87, in get

value = self._get(key, default, version, client)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/cache.py", line 34, in _decorator

raise e.__cause__

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_redis/client/default.py", line 220, in get

value = client.get(key)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/commands/core.py", line 1790, in get

return self.execute_command("GET", name)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/integrations/redis.py", line 170, in sentry_patched_execute_command

return old_execute_command(self, name, *args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/client.py", line 1255, in execute_command

conn = self.connection or pool.get_connection(command_name, **options)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/connection.py", line 1442, in get_connection

connection.connect()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/redis/connection.py", line 704, in connect

raise ConnectionError(self._error_message(e))

redis.exceptions.ConnectionError: Error 111 connecting to 172.19.4.33:6379. Connection refused.

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 219, in ensure_connection

self.connect()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/integrations/django/__init__.py", line 605, in connect

return real_connect(self)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/utils/asyncio.py", line 33, in inner

return func(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 200, in connect

self.connection = self.get_new_connection(conn_params)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/utils/asyncio.py", line 33, in inner

return func(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/postgresql/base.py", line 187, in get_new_connection

connection = Database.connect(**conn_params)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/psycopg2/__init__.py", line 127, in connect

conn = _connect(dsn, connection_factory=connection_factory, **kwasync)

psycopg2.OperationalError: connection to server at "172.19.3.36", port 6432 failed: FATAL: client_login_timeout (server down)

The above exception was the direct cause of the following exception:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/utils/dispatch/signal.py", line 276, in send

response = receiver(signal=self, sender=sender, **named)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/corehq/ex-submodules/casexml/apps/phone/tasks.py", line 68, in update_celery_state

backend.store_result(headers['id'], None, ASYNC_RESTORE_SENT)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/backends/base.py", line 528, in store_result

self._store_result(task_id, result, state, traceback,

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_celery_results/backends/database.py", line 132, in _store_result

self.TaskModel._default_manager.store_result(**task_props)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_celery_results/managers.py", line 43, in _inner

return fun(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django_celery_results/managers.py", line 168, in store_result

obj, created = self.using(using).get_or_create(task_id=task_id,

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/models/query.py", line 581, in get_or_create

return self.get(**kwargs), False

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/models/query.py", line 431, in get

num = len(clone)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/models/query.py", line 262, in __len__

self._fetch_all()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/models/query.py", line 1324, in _fetch_all

self._result_cache = list(self._iterable_class(self))

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/models/query.py", line 51, in __iter__

results = compiler.execute_sql(chunked_fetch=self.chunked_fetch, chunk_size=self.chunk_size)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/models/sql/compiler.py", line 1173, in execute_sql

cursor = self.connection.cursor()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/utils/asyncio.py", line 33, in inner

return func(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 259, in cursor

return self._cursor()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 235, in _cursor

self.ensure_connection()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/utils/asyncio.py", line 33, in inner

return func(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 219, in ensure_connection

self.connect()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/utils.py", line 90, in __exit__

raise dj_exc_value.with_traceback(traceback) from exc_value

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 219, in ensure_connection

self.connect()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/sentry_sdk/integrations/django/__init__.py", line 605, in connect

return real_connect(self)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/utils/asyncio.py", line 33, in inner

return func(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/base/base.py", line 200, in connect

self.connection = self.get_new_connection(conn_params)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/utils/asyncio.py", line 33, in inner

return func(*args, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/django/db/backends/postgresql/base.py", line 187, in get_new_connection

connection = Database.connect(**conn_params)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/psycopg2/__init__.py", line 127, in connect

conn = _connect(dsn, connection_factory=connection_factory, **kwasync)

django.db.utils.OperationalError: connection to server at "172.19.3.36", port 6432 failed: FATAL: client_login_timeout (server down)

2023-05-14 11:36:23,727 WARNING [celery.worker.consumer.consumer] consumer: Connection to broker lost. Trying to re-establish the connection...

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/worker/consumer/consumer.py", line 332, in start

blueprint.start(self)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/bootsteps.py", line 116, in start

step.start(parent)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/worker/consumer/consumer.py", line 628, in start

c.loop(*c.loop_args())

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/worker/loops.py", line 130, in synloop

connection.drain_events(timeout=2.0)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/kombu/connection.py", line 316, in drain_events

return self.transport.drain_events(self.connection, **kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/kombu/transport/pyamqp.py", line 169, in drain_events

return connection.drain_events(**kwargs)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/amqp/connection.py", line 525, in drain_events

while not self.blocking_read(timeout):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/amqp/connection.py", line 530, in blocking_read

frame = self.transport.read_frame()

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/amqp/transport.py", line 294, in read_frame

frame_header = read(7, True)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/amqp/transport.py", line 635, in _read

raise OSError('Server unexpectedly closed connection')

OSError: Server unexpectedly closed connection

2023-05-14 11:36:23,729 WARNING [py.warnings] /home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/celery/worker/consumer/consumer.py:367: CPendingDeprecationWarning:

In Celery 5.1 we introduced an optional breaking change which

on connection loss cancels all currently executed tasks with late acknowledgement enabled.

These tasks cannot be acknowledged as the connection is gone, and the tasks are automatically redelivered back to the queue.

You can enable this behavior using the worker_cancel_long_running_tasks_on_connection_loss setting.

In Celery 5.1 it is set to False by default. The setting will be set to True by default in Celery 6.0.

warnings.warn(CANCEL_TASKS_BY_DEFAULT, CPendingDeprecationWarning)

2023-05-14 11:36:24,973 CRITICAL [celery.worker.request] Couldn't ack 47, reason:RecoverableConnectionError(None, 'connection already closed', None, '')

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/kombu/message.py", line 128, in ack_log_error

self.ack(multiple=multiple)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/kombu/message.py", line 123, in ack

self.channel.basic_ack(self.delivery_tag, multiple=multiple)

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/amqp/channel.py", line 1407, in basic_ack

return self.send_method(

File "/home/cchq/www/echis/releases/2023-05-13_11.49/python_env-3.9/lib/python3.9/site-packages/amqp/abstract_channel.py", line 67, in send_method

raise RecoverableConnectionError('connection already closed')

Thank you,

Hi Siraj,

Thanks for the logs. From the error messages, it looks like Celery tasks are unable to connect to 172.19.3.36 (PgBouncer) and 172.19.4.33 (Redis). That is a bit odd, because all the services appear to be OK.

Could it be an intermittent connection problem?
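If the failures are intermittent, a common mitigation is to retry a connection with exponential backoff instead of failing on the first refusal. The sketch below is a generic, hypothetical helper in plain Python; it is not how CommCare HQ, django-redis or Celery actually handle reconnection. Note that `redis.exceptions.ConnectionError` and `psycopg2.OperationalError` do not inherit from Python's built-in `ConnectionError`, so the relevant client exception classes would need to be passed in `retry_on`.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    func: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError,),
    attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Hypothetical helper: call func(), retrying on `retry_on` exceptions.

    Delays grow as base_delay * 2**n (0.5s, 1s, 2s, ...) up to max_delay.
    The last exception is re-raised once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return func()
        except retry_on:
            attempt += 1
            if attempt >= attempts:
                raise
            sleep(min(base_delay * 2 ** (attempt - 1), max_delay))
```

The `sleep` parameter is injectable so the backoff schedule can be checked without actually waiting.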

Hi Norman,

I think so; it must be an intermittent error. The PgBouncer and Redis ports are reachable from the Celery servers.
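A single successful check does not rule out short outages. The sketch below probes TCP ports repeatedly and records each failure with its errno name (on Linux, errno 111 is `ECONNREFUSED`, matching the "Error 111 ... Connection refused" lines above). The function names are hypothetical, and a successful TCP connect only shows the port is accepting connections, not that PgBouncer or Redis is fully healthy.

```python
import errno
import socket
import time
from datetime import datetime
from typing import List, Optional, Sequence, Tuple


def probe_once(host: str, port: int, timeout: float = 3.0) -> Optional[str]:
    """Try one TCP connect; return None on success, else a short error description."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return None
    except OSError as exc:
        # Timeouts have no errno, so fall back to the exception class name.
        name = errno.errorcode.get(exc.errno, "") if exc.errno else ""
        return f"{name or type(exc).__name__}: {exc}"


def probe_repeatedly(
    targets: Sequence[Tuple[str, int]], rounds: int, interval: float
) -> List[Tuple[datetime, str, int, str]]:
    """Probe every target `rounds` times, `interval` seconds apart; return failures."""
    failures = []
    for i in range(rounds):
        for host, port in targets:
            err = probe_once(host, port)
            if err is not None:
                failures.append((datetime.now(), host, port, err))
        if i < rounds - 1:
            time.sleep(interval)
    return failures


# Addresses taken from the tracebacks above (Redis and PgBouncer); run from a Celery node:
# TARGETS = [("172.19.4.33", 6379), ("172.19.3.36", 6432)]
# for failure in probe_repeatedly(TARGETS, rounds=720, interval=5):  # about one hour
#     print(*failure)
```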

Here is the other error found in the Celery log.

Error: Invalid value for '-A' / '--app':

Unable to load celery application.

While trying to load the module corehq the following error occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/app/utils.py", line 390, in find_app

found = sym.app

AttributeError: module 'corehq' has no attribute 'app'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/app/utils.py", line 395, in find_app

found = sym.celery

AttributeError: module 'corehq' has no attribute 'celery'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/app/utils.py", line 384, in find_app

sym = symbol_by_name(app, imp=imp)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/kombu/utils/imports.py", line 61, in symbol_by_name

return getattr(module, cls_name) if cls_name else module

AttributeError: module 'corehq' has no attribute 'celery'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/bin/celery.py", line 57, in convert

return find_app(value)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/app/utils.py", line 402, in find_app

return find_app(

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/app/utils.py", line 387, in find_app

sym = imp(app)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/celery/utils/imports.py", line 105, in import_from_cwd

return imp(module, package=package)

File "/usr/local/lib/python3.9/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 790, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "/home/cchq/www/echis/releases/2023-04-15_22.01/corehq/celery.py", line 16, in <module>

django.setup() # corehq.apps.celery.Config creates the app

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/django/__init__.py", line 19, in setup

configure_logging(settings.LOGGING_CONFIG, settings.LOGGING)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/django/conf/__init__.py", line 82, in __getattr__

self._setup(name)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/django/conf/__init__.py", line 69, in _setup

self._wrapped = Settings(settings_module)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/python_env-3.9/lib/python3.9/site-packages/django/conf/__init__.py", line 170, in __init__

mod = importlib.import_module(self.SETTINGS_MODULE)

File "/usr/local/lib/python3.9/importlib/__init__.py", line 127, in import_module

return _bootstrap._gcd_import(name[level:], package, level)

File "<frozen importlib._bootstrap>", line 1030, in _gcd_import

File "<frozen importlib._bootstrap>", line 1007, in _find_and_load

File "<frozen importlib._bootstrap>", line 986, in _find_and_load_unlocked

File "<frozen importlib._bootstrap>", line 680, in _load_unlocked

File "<frozen importlib._bootstrap_external>", line 790, in exec_module

File "<frozen importlib._bootstrap>", line 228, in _call_with_frames_removed

File "/home/cchq/www/echis/releases/2023-04-15_22.01/settings.py", line 1604, in <module>

SHARED_DRIVE_CONF = helper.SharedDriveConfiguration(

File "/home/cchq/www/echis/releases/2023-04-15_22.01/settingshelper.py", line 25, in __init__

self._restore_dir = self._init_dir(restore_dir)

File "/home/cchq/www/echis/releases/2023-04-15_22.01/settingshelper.py", line 36, in _init_dir

os.mkdir(path)

PermissionError: [Errno 13] Permission denied: '/mnt/shared_echis/restore_payloads'

Hi Siraj,

I think what's happening here is that Celery is trying to start on a machine where "/mnt/shared_echis" is not mounted, or is not mounted correctly, or doesn't have the permissions that would allow the cchq user to create a "restore_payloads" directory in it.

Is that possible?

Maybe the machine is not in the right group/groups in inventory.ini, and "/mnt/shared_echis" was not set up?
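A quick pre-flight check for this situation can be run on the node as the cchq user. The sketch below reports whether a directory exists, is a mount point, and is writable, which is what creating `restore_payloads` inside it requires. The function name is hypothetical, and `os.access` results on NFS can differ from what the server finally enforces, so treat it as a first indication rather than proof.

```python
import os
from typing import List


def check_shared_dir(path: str, require_mount: bool = True) -> List[str]:
    """Hypothetical helper: list problems that would stop the current user from
    creating subdirectories under `path`. An empty list means the checks passed."""
    problems = []
    if not os.path.isdir(path):
        problems.append(f"{path} does not exist or is not a directory")
        return problems
    if require_mount and not os.path.ismount(path):
        # An unmounted NFS path is usually just an empty directory on the local disk.
        problems.append(f"{path} is not a mount point (is the shared drive mounted?)")
    # Creating an entry in a directory needs both write and search (execute) permission.
    if not os.access(path, os.W_OK | os.X_OK):
        problems.append(f"{path} is not writable by the current user")
    return problems


# On a Celery node, for example: print(check_shared_dir("/mnt/shared_echis"))
```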

Yes, you are right, Norman. This occurred when I ran `ap deploy_shared_dir.yml --tags=nfs --limit=<celery node>` instead of running it against shared_dir_host.

I wanted to show that these are the only errors found in the log file.

Ah! Thanks for clarifying, Siraj.

Just to make sure I understand where we are at with this issue:

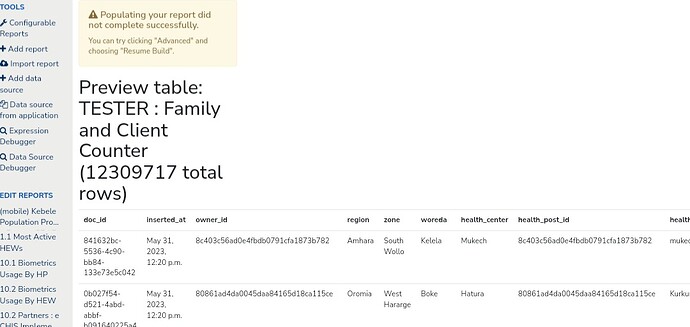

- The "Preview Data Source" page says that the report is still being populated, but that is not true.

- The worker(s) populating the data source have stopped running, possibly because they temporarily lost their connection to the Postgres/PgBouncer host and the Redis host.

- All services are currently running fine.

Does that sound correct?

If so, do these follow-up steps sound right?

- The message "Your report is still being populated" should be fixed so that it accurately reflects the current status: that the task failed.

- The task should be restarted.

Hi @andyasne and @sirajhassan

On the "Edit Data Source" page, try clicking the "Advanced" button and choosing "Resume Build". CommCare HQ may be able to continue building the data source from where it left off.
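To illustrate why resuming can be much cheaper than starting over, here is a generic sketch of a checkpointed build loop: it records the last processed ID after each successful batch, so a restarted run skips work that was already done. This is a hypothetical illustration of the general technique, not CommCare HQ's actual "Resume Build" implementation; the checkpoint dictionary and the assumption that IDs arrive in sorted order are illustrative.

```python
from typing import Callable, Dict, Iterable, List, Optional


def resumable_build(
    doc_ids: Iterable[str],
    process_batch: Callable[[List[str]], None],
    checkpoint: Dict[str, Optional[str]],
    batch_size: int = 1000,
) -> int:
    """Hypothetical sketch: process doc_ids (assumed sorted) in batches, skipping IDs
    at or before checkpoint["last_id"]. Returns how many IDs this run processed."""
    last_done = checkpoint.get("last_id")
    batch: List[str] = []
    processed = 0
    for doc_id in doc_ids:
        if last_done is not None and doc_id <= last_done:
            continue  # already handled by an earlier, interrupted run
        batch.append(doc_id)
        if len(batch) == batch_size:
            process_batch(batch)
            checkpoint["last_id"] = batch[-1]  # advance only after the batch succeeds
            processed += len(batch)
            batch = []
    if batch:
        process_batch(batch)
        checkpoint["last_id"] = batch[-1]
        processed += len(batch)
    return processed
```

In a real system the checkpoint would live in persistent storage so it survives the worker being killed.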

We are working to improve how we determine when a data source rebuild has been interrupted, so HQ can give the user accurate information.
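One common way to decide that an "in progress" status has gone stale is a heartbeat: the worker updates a timestamp as it makes progress, and the UI reports the build as interrupted once that timestamp is older than a threshold. The sketch below assumes that design; the `BuildStatus` fields and the 6-hour threshold are hypothetical, and this is not a description of how HQ's fix was implemented.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class BuildStatus:
    # Hypothetical fields; a real data source would persist these somewhere.
    started: datetime
    finished: Optional[datetime]
    last_heartbeat: Optional[datetime]  # updated by the worker after each batch


def describe(status: BuildStatus, now: datetime,
             stale_after: timedelta = timedelta(hours=6)) -> str:
    """Return "complete", "in progress" or "interrupted" for a build."""
    if status.finished is not None:
        return "complete"
    last_sign_of_life = status.last_heartbeat or status.started
    if now - last_sign_of_life > stale_after:
        return "interrupted"
    return "in progress"
```

The threshold has to be longer than the slowest normal gap between batches; otherwise healthy but slow builds would be reported as interrupted.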

Thanks,

Norman

Hi @andyasne and @sirajhassan

I just wanted to close the loop on this. A code change has been made to CommCare HQ and deployed to our production environment; it informs users when a UCR rebuild has failed so that they can take corrective action.

Thanks,

Norman

Hi Norman,

We have restarted the rebuild and are applying your recommendations. We will get back to you with the outcome.

Thank you.

Hi @sirajhassan, did this resolve the issue?

Hi Ali,

Not yet. The row count was increasing by over 1 million per day until it reached 12 million; then the rate dropped dramatically (it now grows by fewer than 3,000 rows per day).

It is still populating, but very slowly.

Thank you,

Hi Siraj,

Based on that notification ...

... I think the rebuild stopped before it was complete.

I think the roughly 3,000 rows per day are from new form submissions and cases being added to the data source as they arrive.
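This reasoning can be made mechanical with daily row counts: compare each day's growth with the normal daily inflow of new submissions, and treat growth near that inflow as "no rebuild progress". A minimal sketch; the function name, the 3x factor and the inflow figure are assumptions that a project would tune from its own submission volume.

```python
from typing import List, Sequence


def classify_daily_growth(
    counts: Sequence[int], inflow_per_day: float, factor: float = 3.0
) -> List[str]:
    """Hypothetical helper: label each day-over-day increase in a row count.

    counts: row counts sampled once per day, oldest first.
    inflow_per_day: rough number of rows that new submissions add per day.
    Growth above factor * inflow_per_day is labelled "rebuilding";
    anything smaller is labelled "new data only".
    """
    labels = []
    for prev, cur in zip(counts, counts[1:]):
        delta = cur - prev
        labels.append("rebuilding" if delta > factor * inflow_per_day else "new data only")
    return labels
```

With illustrative counts shaped like the ones described in this thread (about 1 million per day up to 12 million, then under 3,000 per day), the last days come out as "new data only", which matches the interpretation that the rebuild itself had stopped.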

You might need to try "Resume Build", as the notification suggests.

Thank you, Norman. We will try to rebuild.