On my system I have ~/.bash_profile, as on yours. What's different is ~/.profile; on mine its contents are:

    # ~/.profile: executed by the command interpreter for login shells.
    # This file is not read by bash(1), if ~/.bash_profile or ~/.bash_login
    # exists.
    # see /usr/share/doc/bash/examples/startup-files for examples.
    # the files are located in the bash-doc package.

    # the default umask is set in /etc/profile; for setting the umask
    # for ssh logins, install and configure the libpam-umask package.
    #umask 022

    # if running bash
    if [ -n "$BASH_VERSION" ]; then
        # include .bashrc if it exists
        if [ -f "$HOME/.bashrc" ]; then
            . "$HOME/.bashrc"
        fi
    fi

    # set PATH so it includes user's private bin if it exists
    if [ -d "$HOME/bin" ] ; then
        PATH="$HOME/bin:$PATH"
    fi

[ -t 1 ] && source ~/init-ansible

I assume the above is the default for Ubuntu 18.04 - I don't have any reference to ~/init-ansible

~/init-ansible is indeed a symlink to ~/environments/monolith/commcare-cloud/control/init.sh
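For context, `[ -t 1 ]` tests whether file descriptor 1 (stdout) is attached to a terminal, so the `[ -t 1 ] && source ~/init-ansible` line only sources the script in a terminal session. A minimal sketch of the same test (the function name `check_stdout` is made up for illustration):

```shell
# Report whether stdout (file descriptor 1) is attached to a terminal.
# This is the same test that guards `source ~/init-ansible`.
check_stdout() {
    if [ -t 1 ]; then
        echo "stdout is a terminal"
    else
        echo "stdout is not a terminal"
    fi
}

# Called directly, the result depends on how the shell was started.
check_stdout

# Piped through cat, stdout is a pipe, so the test fails.
check_stdout | cat
```

Run in an interactive terminal, the first call should report a terminal and the piped call should not.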

I'm following instructions for the setup here: http://dimagi.github.io/commcare-cloud/setup/new_environment.html

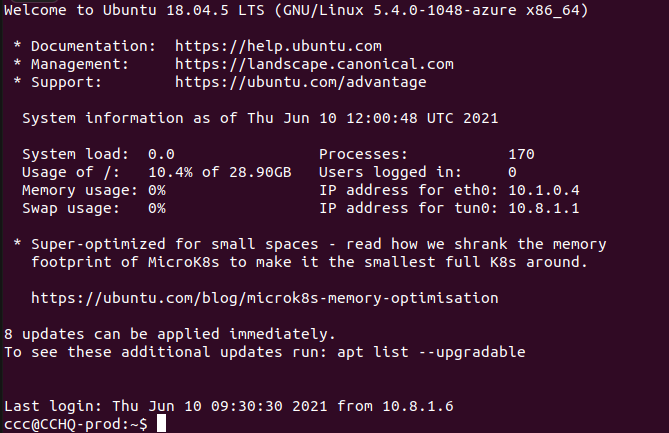

Here's the prompt right after logging in:

And running ~/.commcare-cloud/load_config.sh produces no output at all.
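One thing that may be worth checking, given the comment at the top of ~/.profile: according to bash(1), a login shell reads only the first of ~/.bash_profile, ~/.bash_login and ~/.profile that exists and is readable. Since ~/.bash_profile exists here, ~/.profile (and anything it sources) may never be read at login unless ~/.bash_profile sources it itself. A rough sketch to see which file would win; `first_login_file` is a hypothetical helper, not part of commcare-cloud:

```shell
# Print the first personal login startup file bash would read for a
# given home directory, in the order documented in bash(1):
# ~/.bash_profile, then ~/.bash_login, then ~/.profile.
first_login_file() {
    local home_dir="${1:-$HOME}"
    local f
    for f in .bash_profile .bash_login .profile; do
        if [ -r "$home_dir/$f" ]; then
            echo "$home_dir/$f"
            return 0
        fi
    done
    return 1
}

first_login_file "$HOME" || echo "no personal login file found"
```

If this prints ~/.bash_profile, the next step would be to look inside that file for a line that sources ~/.profile.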

If I run [ -t 1 ] && source ~/init-ansible from bash after logging in, I get:

    Downloading dependencies from galaxy and pip
    ansible-galaxy install -f -r /home/ccc/commcare-cloud/src/commcare_cloud/ansible/requirements.yml
    ERROR: Cannot install -r /tmp/tmpj1sgcdup (line 1) and urllib3==1.26.5 because these package versions have conflicting dependencies.
    ERROR: ResolutionImpossible: for help visit https://pip.pypa.io/en/latest/user_guide/#fixing-conflicting-dependencies
    Traceback (most recent call last):
      File "/home/ccc/.virtualenvs/cchq/bin/pip-sync", line 8, in <module>
        sys.exit(cli())
      File "/home/ccc/.virtualenvs/cchq/lib/python3.6/site-packages/click/core.py", line 1137, in __call__
        return self.main(*args, **kwargs)
      File "/home/ccc/.virtualenvs/cchq/lib/python3.6/site-packages/click/core.py", line 1062, in main
        rv = self.invoke(ctx)
      File "/home/ccc/.virtualenvs/cchq/lib/python3.6/site-packages/click/core.py", line 1404, in invoke
        return ctx.invoke(self.callback, **ctx.params)
      File "/home/ccc/.virtualenvs/cchq/lib/python3.6/site-packages/click/core.py", line 763, in invoke
        return __callback(*args, **kwargs)
      File "/home/ccc/.virtualenvs/cchq/lib/python3.6/site-packages/piptools/scripts/sync.py", line 151, in cli
        ask=ask,
      File "/home/ccc/.virtualenvs/cchq/lib/python3.6/site-packages/piptools/sync.py", line 256, in sync
        check=True,
      File "/usr/lib/python3.6/subprocess.py", line 438, in run
        output=stdout, stderr=stderr)
    subprocess.CalledProcessError: Command '['/home/ccc/.virtualenvs/cchq/bin/python', '-m', 'pip', 'install', '-r', '/tmp/tmpj1sgcdup', '-q']' returned non-zero exit status 1.
    [WARNING]: - dependency andrewrothstein.couchdb (v2.1.4) (v2.1.4) from role andrewrothstein.couchdb-cluster differs from already installed version (fcb957ed038ab1c4fddcfef6b9c7617dcdeec9b7), skipping
    [WARNING]: - dependency ANXS.cron (None) from role tmpreaper differs from already installed version (v1.0.2), skipping
    /home/ccc
    [1] Done { COMMCARE=; cd ${COMMCARE_CLOUD_REPO}; pip install --quiet --upgrade pip-tools; pip-sync --quiet requirements.txt; pip install --quiet --editable .; cd -; }
    [2]- Done COMMCARE= pip install --quiet --upgrade pip
    -bash: wait: %2: no such job
    [WARNING]: - dependency sansible.java (None) from role sansible.logstash differs from already installed version (v2.1.4), skipping
    [WARNING]: - dependency sansible.users_and_groups (None) from role sansible.logstash differs from already installed version (v2.0.5), skipping
    ansible-galaxy collection install -f -r /home/ccc/commcare-cloud/src/commcare_cloud/ansible/requirements.yml
    To finish first-time installation, run `manage-commcare-cloud configure`
    [3]+ Done COMMCARE= manage-commcare-cloud install
    ✓ origin already set to https://github.com/dimagi/commcare-cloud.git
    ✗ /home/ccc/commcare-cloud/src/commcare_cloud/fab/config.py does not exist and suitable location to copy it from was not found.
    This file is just a convenience, so this is a non-critical error.
    If you have fab/config.py in a previous location, then copy it to /home/ccc/commcare-cloud/src/commcare_cloud/fab/config.py.
    /home/ccc
    Welcome to commcare-cloud
    Available commands:
    update-code - update the commcare-cloud repositories (safely)
    source /home/ccc/.virtualenvs/cchq/bin/activate - activate the ansible virtual environment
    ansible-deploy-control [environment] - deploy changes to users on this control machine
    commcare-cloud - CLI wrapper for ansible.
    See commcare-cloud -h for more details.
    See commcare-cloud <env> <command> -h for command details.
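The pip error in the output names urllib3==1.26.5 as one side of the conflict. A small sketch for locating where a package is pinned in a requirements file; `find_pins` is a hypothetical helper, and the path in the usage note is my assumption based on the `pip-sync --quiet requirements.txt` step shown in the output:

```shell
# List the lines (with line numbers) of a requirements file that name
# a given package. The match is case-insensitive and anchored so that
# "urllib3" does not also match a package such as "urllib3-extra".
# Usage: find_pins PACKAGE FILE
find_pins() {
    grep -n -i -E "^$1(\$|[^A-Za-z0-9._-])" "$2"
}
```

For example, `find_pins urllib3 /home/ccc/commcare-cloud/requirements.txt` should show the urllib3 pin if the file lives there. Running `pip check` inside the virtualenv can also report installed packages whose requirements are incompatible.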

Thanks

Ed